Bucket Transform

When the raw data is bucketed more coarsely than the timeline used to display it, Bucket Transforms can be used to distribute and align the data.

Imagine a scenario where the raw data is WEEKLY, but we want to show that data in a DAILY timeline. We need a way to transform the WEEKLY data, disaggregating it into multiple days. It might make sense to distribute the quantity equally across all days in the week, or to put the full WEEKLY quantity on MONDAY with all other days set to zero. Any number of other variations may also be correct, depending on the business requirement.
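The two options described above can be illustrated with a small standalone sketch. This is not Platform code: the function name `spread_week`, the strategy names, and the sample date and quantity are all hypothetical and exist only to show the arithmetic.

```python
from datetime import date, timedelta


def spread_week(week_start, quantity, strategy="equal"):
    """Disaggregate one WEEKLY quantity into seven DAILY values.

    "equal":     the same share on every day of the week.
    "first_day": the full quantity on the first day, zero on the others.
    (Both strategy names are illustrative, not Platform identifiers.)
    """
    days = [week_start + timedelta(days=i) for i in range(7)]
    if strategy == "equal":
        return {d: quantity / 7 for d in days}
    if strategy == "first_day":
        return {d: (quantity if i == 0 else 0) for i, d in enumerate(days)}
    raise ValueError(f"unknown strategy: {strategy}")


monday = date(2023, 10, 30)  # illustrative week start (a Monday)
equal = spread_week(monday, 70)               # 10.0 on each of the 7 days
front = spread_week(monday, 70, "first_day")  # 70 on Monday, 0 elsewhere
```

Either result keeps the weekly total intact; the only difference is where the quantity lands within the week.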

For these types of scenarios, we specify the SqlDefBucketTypeField and BucketTransform elements:

<SqlDef name="forecasts"> select request_qty, request_date, bucket_type from forecast where …</SqlDef>
<DM>
  <SqlDefName>forecasts</SqlDefName>
  <SqlQuantityField>request_qty</SqlQuantityField>
  <SqlDateField>request_date</SqlDateField>
  <SqlDefBucketTypeField>
    <FieldName>bucket_type</FieldName>
  </SqlDefBucketTypeField>
  <BucketTransform sourceBucketType="WEEKLY" transformFunction="fairShare"/>
</DM>

In the example above, we get the bucketization of the raw data from the bucket_type column of the SQL result set using the SqlDefBucketTypeField element.

When Platform renders this data, it checks the bucketization of the buckets into which the data must be placed for display. If the DM is of a finer grain (e.g. DAILY) than the raw data (WEEKLY), the developer must specify a BucketTransform to disaggregate the WEEKLY values over the displayed DAILY buckets. The developer can provide multiple BucketTransform elements, each with a different sourceBucketType, to handle different types of disaggregation.

For example:

<BucketTransform sourceBucketType="WEEKLY" transformFunction="fairShare"/>

Here, the fairShare transform function is used to transform WEEKLY aggregated data.

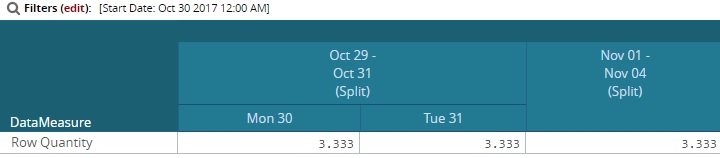

Assume for a moment the user chose to bucketize by SPLIT_WEEK. If the SQL returns a quantity of 10 on Mon Oct 30 with a bucket_type of WEEKLY, the following would be displayed on the UI:

The following standard transform functions are available in Platform:

first: Put all data into the first bucket.

last: Put all data into the last bucket.

fairShare: Split value equally into all buckets.

fairShareRoundedFavorFirst: Split the value equally across all buckets, rounding each share and adding the rounding remainder to the first bucket.

fairShareRoundedFavorLast: Split the value equally across all buckets, rounding each share and adding the rounding remainder to the last bucket.

byDuration: Disaggregate the value in proportion to the time duration of each bucket.

byDurationRoundedFavorFirst: Disaggregate the value in proportion to the time duration of each bucket. If the resulting values are fractional, they are rounded and the fractional remainder is added to the first bucket.

byDurationRoundedFavorLast: Disaggregate the value in proportion to the time duration of each bucket. If the resulting values are fractional, they are rounded and the fractional remainder is added to the last bucket.
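To make the differences between these functions concrete, the following standalone sketch reproduces their arithmetic on plain numbers. It is an illustration only, not Platform's implementation: the Python function names are hypothetical, and the rounding rule used here (round each share down, then add the leftover to the favored bucket, for non-negative whole quantities) is one plausible reading of the descriptions above. Platform's actual rounding behavior may differ.

```python
def first(value, n):
    """All of the value goes into the first of n buckets."""
    return [value] + [0] * (n - 1)


def last(value, n):
    """All of the value goes into the last of n buckets."""
    return [0] * (n - 1) + [value]


def fair_share(value, n):
    """Equal (possibly fractional) share in each of n buckets."""
    return [value / n] * n


def fair_share_rounded(value, n, favor="first"):
    """Equal whole-number shares; the leftover goes to the favored bucket.

    Assumes a non-negative integer value (an assumption of this sketch).
    """
    base, leftover = divmod(value, n)
    shares = [base] * n
    shares[0 if favor == "first" else n - 1] += leftover
    return shares


def by_duration(value, durations):
    """Shares proportional to each bucket's duration (e.g. in days)."""
    total = sum(durations)
    return [value * d / total for d in durations]


def by_duration_rounded(value, durations, favor="first"):
    """Proportional whole-number shares; leftover goes to the favored bucket."""
    total = sum(durations)
    shares = [value * d // total for d in durations]  # round each share down
    shares[0 if favor == "first" else len(shares) - 1] += value - sum(shares)
    return shares
```

For a quantity of 10 over three equal buckets, `fair_share_rounded(10, 3)` yields `[4, 3, 3]` and `fair_share_rounded(10, 3, "last")` yields `[3, 3, 4]`. With two hypothetical buckets lasting 2 and 5 days, `by_duration_rounded(10, [2, 5])` yields `[3, 7]`, while the unrounded `by_duration(10, [2, 5])` gives roughly 2.86 and 7.14. In every case the shares add back up to the original quantity.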

You can create your own transform function by implementing com.onenetwork.platform.data.tlv.BucketTransformer and providing its fully-qualified class name in the transformFunction attribute.

For example:

<BucketTransform sourceBucketType="WEEKLY" transformFunction="com.book.tlv.MyBucketTransform"/>